Microsoft Dynamics 365, MYOB Advanced, SAP Business One Cloud ERP

Cloud ERP software such as MYOB Advanced, SAP Business One and Microsoft Dynamics 365 Business Central provides a single solution that empowers your business and connects your team. Minimise repetitive tasks, lay the foundation for automation and free up time to invest in high-value tasks that increase overall business productivity and efficiency. It makes sense!

One team able to help you review and compare Microsoft Dynamics 365 Business Central, MYOB Advanced and SAP Business One.

LAUNCH PARTNER 2023

Dynamics 365 Business Central Retail

Cloud Factory is delighted to be appointed to support NP Retail from NaviPartner for the ANZ market. NaviPartner has a long history as a Microsoft Dynamics 365 partner in the Danish market and, as such, is close to the worldwide centre of Dynamics 365 Business Central.

Their many years of expertise have led them to funnel their knowledge of their clients’ needs and challenges into a strong product innovation focus. The result is a set of well-documented and tested omnichannel solutions, namely NP Retail, NP Ecommerce, NP WMS and NP Entertainment.


LAUNCH PARTNER 2015

MYOB Advanced Consultants

Cloud Factory has been providing MYOB Advanced consulting and implementation services in Australia since 2014. We were the launch partners for MYOB Advanced in Melbourne and have implemented MYOB Advanced for various small to medium businesses.

Our implementation and support team consists of certified MYOB Advanced consultants who are among the most knowledgeable in Australia. Every business that implements a new ERP system initially has many questions and requires ongoing support. Our MYOB Advanced support team will provide you with ongoing MYOB Advanced support after the implementation.

LAUNCH PARTNER 2005

SAP Business One Consultants

Cloud Factory has been providing SAP Business One consulting and implementation services in Australia since the product's Australian launch. In fact, we were the launch partners for SAP Business One in 2005 and have implemented SAP Business One for various small to medium businesses.

Our team consists of several SAP Business One certified consultants who can ensure you stay current with all software updates. Our SAP Business One projects are class-leading and have been internationally recognised. We provide services throughout Australia. We understand that learning your way around the system and keeping up with updates can be challenging for most businesses.

Therefore, after implementing your new ERP software, we also provide SAP Business One support with the help of our support team who are online and only a phone call away if you need help. Lastly, our User Group was formed in 2007 and we can even introduce you to the first Chair of that group meeting!

LAUNCH PARTNER 2018

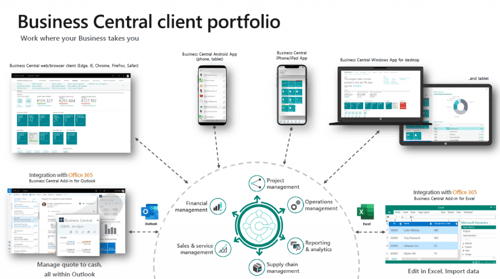

Microsoft Dynamics 365 Business Central

Cloud Factory has been delighted to help legacy ERP clients migrate to Microsoft Dynamics 365 Business Central, along with various point solutions such as Retail, Warehousing and Manufacturing, working alongside the best partners in the world, such as NaviPartner, InsightWorks and Tasklet.

We also implement and support KeyPay Payroll (now Employment Hero) for Dynamics 365 Business Central. We have also teamed with WIISE to bring their various enhancements to clients, namely Bank Feeds, Enhanced Landed Costs, Integrated Payroll and Rostering, as well as a specific version for registered Not-for-Profits and Charities.

LAUNCH PARTNER 2007

Koerber Warehouse Management Edge

K.Motion Warehouse Edge (previously Accellos One Warehouse, Radio Beacon WMS and HighJump) is the WMS designed to enable SMBs to drive rapid, out-of-box warehouse optimisation and efficiency.

Warehouse Edge delivers a powerful, scalable, and flexible real-time WMS to streamline your business processes and help you achieve increased accuracy, throughput, and visibility into warehouse operations. The WMS software serves as a hub for all of your supply chain needs, satisfying the diverse business requirements of a broad range of industries.


Where are you on your ERP system review?

From Accounting to a Business Platform.

You most likely started the business with Xero, then replaced a spreadsheet with a 3rd party solution for inventory, then added a CRM solution, and now you need a reporting system to report across these various cloud solutions. Is it getting too much to manage, and are discrepancies between Finance and Inventory now worrying the business?

It's clearly time to consider a final change and implement a single end-to-end Business Process Platform.

Replacing a legacy ERP with a modern Cloud ERP solution?

A modern Cloud-based ERP system (or Business Platform) typically provides a comprehensive set of business management functions and remains flexible, scalable and adaptable as business needs change.

Cloud Factory solutions can include personalised Business Intelligence to work with applications designed for your industry, enabling a real-time view of your business. To improve workforce productivity, it also has a familiar and consistent modern user interface and is easy to use.

Cloud ERP is accessible anytime, anywhere, through any device with a browser. In fact, we believe that MYOB Advanced, SAP Business One and Microsoft Dynamics 365 Business Central are more powerful solutions today than a mid-to-large-sized ERP of 20+ years ago.

Why not workshop the solutions to see if what we believe is right?

WHO ARE WE?

Our Skills, Expertise and Project Commitment

With customers located in many different parts of Australia, our team is always delighted and ready to help you implement an on-premise or Cloud solution.

Australia's leading ERP systems for small to mid-size business


The SAP® Business One application offers an affordable way to manage your entire business – from sales and customer relationships to financials and operations. Learn more below.


Business Central enables companies to manage their business, including finance, manufacturing, sales, shipping, project management, services etc. Business Central is fast to implement and easy to configure.

Cloud Factory was the first partner company to launch MYOB Advanced in Australia, 5 years ago.

Learn more about MYOB Advanced and decide if it is the best fit for your company and your team.

Koerber K.Motion Warehouse Edge, formerly HighJump, was named a Leader in the Gartner Magic Quadrant for Warehouse Management. Learn more about this feature-rich Warehouse Management System and its capabilities.

What Our Clients Say

With all our data now in one place, it means we can proactively communicate with customers if we need to. We can serve customers better. With a strong database, excellent reporting and operations which run smoothly the business is positioned to scale further.

The upgrade itself is quite straightforward – but the trick is to invest your time, energy and attention into the preparation and the testing. We wanted to benefit from numerous improvements and updates in the 9.2 version of the software. At the same time, we had several other justifications, such as eliminating outdated programs which were not compatible. While you may think 5 weeks or whatever is heaps of time, the deadline creeps closer and before you know it, the upgrade is happening. Professionally, Cloud Factory's team was very good. Once we had the upgrade locked in, the guys were here and knocked over a whole lot of stuff in just two days. The implementation took place while I went away for a long holiday. However, Cloud Factory took care of the launch so well I didn’t have a single call about the upgrade whilst away.

It was a long day... longer than I expected... but at every step, I was impressed with the attitude of Cloud Factory's team, their skill level and overall professionalism. There wasn't a problem they couldn't find a solution for.

Again it reinforces in my mind what a good decision it was for us to move to Cloud Factory. Congratulations on having such great assets in your team!

Visibility across the supply chain, across multiple batch codes and numbers, is also far better than it was before. Previously, we could only drill into one product at a time – now we can do the whole lot in one go, which makes inventory control far easier. The system has been up and running for several months and it is performing as expected. But the best benefits are yet to come. This business has grown rapidly as we have established a ready market for our products and it is going to grow much further. Most of our customers themselves run SAP. In time, we’ll look to integrate our supply chain with those of our customers – and most importantly, we have the headroom with Business One to grow as much as we like without having to think about changing the ERP system.

The time had come to upgrade to an ERP to manage the growing business – and due to operating across several sites, it was essential that any solution they chose would allow them to work remotely.

After undertaking an extensive needs analysis supported by the Cloud Factory team, the Woolcock Group decided on MYOB Advanced, as it could perform all their required tasks and was considerably less expensive than so-called leaders in Cloud ERP deployment capability.

Since implementing MYOB Advanced, the team at the Woolcock Group have been able to streamline several processes and save an enormous amount of time on reports and consolidation.

In addition to this, the ability to access their accounting files through a web browser has meant the flexibility to work from anywhere, making managing all the separate businesses much easier.

Cloud Factory's Senior Lead Consultant - ERP has been extremely responsive, always helpful and if I am honest has probably saved our business from falling into a heap during our transition.

We have not only changed IT systems, but phones, staff, freight services, accounting etc and not one of these other aspects have been dealt with by the external parties as well as Cloud Factory has helped us.

Too often people are quick to criticize and praise is hardly ever heard, so I wanted to take this chance to give it when due.

Cloud Factory - thank you...

Good in-depth coverage of the product and excellent knowledge were shared at the MYOB Advanced training by one of the Senior Lead Consultants - ERP at Cloud Factory. Summary handouts of the course material, which were relevant to users, were provided during the session. Good training and a good training environment were presented for the learners.

The training sessions with one of the Senior Lead Consultants - ERP were very helpful and helped speed up the current process. We recommend this training for everyone in our finance department.

I have been liaising with the MYOB Product Manager at Cloud Factory in regards to an unload issue with Woolcock imported invoices.

I feel it necessary to reflect in writing the outstanding customer service he has been providing me today and yesterday. It’s not often I come across such thorough and proactive support. I would like this email to be passed on to his management.

Thank you Cloud Factory, you have put my mind at ease and I truly appreciate your fantastic customer service..!

I would like to state that your emails are the clearest, most concise and organised responses I’ve seen during my time “here”. When I see replies from you I think HOLY MOLY how awesome is this guy, and then I proceed to show a few of my colleagues here.

It was lovely to attend the User Group Event yesterday to learn the updates, tips and hints to make better use of the system. Meeting everyone at Cloud Factory, the MYOB Advanced Developers and other MYOB Advanced customers, and sharing ideas and queries, made this meeting very informative. Thank you for the invitation and organisation.

We would like to express our thanks for your help, especially to the product team, with the migration and continuous support of the system. We have been using MYOB Advanced for 13 months now, and since having this ERP system our team is making better use of it and is more efficient, avoiding double entries and gaining the ability to work with multi-currency conversion, etc. An excellent takeaway from yesterday is that there are many more functions in reporting, projects and add-on apps that can be explored and used to help the business. And I will start following the blogs that Johnathon wrote up.

On behalf of the team at Interchem Pty Ltd we’d like to wish you and your family a very Merry Christmas and thank you for your continued support. We look forward to working with you in the new year.

Cloud Factory Success


Years in Business

Cloud Factory has been working in the ERP Industry for the past 28+ years.


Years of consulting experience

Our team has 230+ years of combined consulting experience, making us one of the industry's ERP implementation experts.


Client Licenses

We have provided 1000+ client licenses in our 28+ years in the ERP industry.